How to transform a Proof Of Concept application to a Software As A Service product

This book will guide you step-by-step to build a scalable product, from a proof of concept to a production-ready SAAS. Every development step starts from a business need, which makes the impact of each delivery clear to the team.

You will see different architecture patterns for separation of concerns, and why some of them fit well and others do not. Everything will happen incrementally, and the product development history can easily be followed by analyzing the commits.

Every chapter is a step in a progressive journey, with specific challenges that are overcome by applying specific programming best practices.

This book can also be considered a .NET tutorial that demonstrates the versatility of the platform for creating different types of applications (CLI, Desktop & Website).

To reach our finished product, we will follow two development directions:

You can find the source code at https://github.com/ignatandrei/console_to_saas

You can read this book online at https://ignatandrei.github.io/console_to_saas/

You can read it offline by downloading the

Also, if you want to support us, you can buy this book from Amazon https://www.amazon.com/dp/B08N56SD4D .

You can find the source code at Console2SAAS or after each chapter.

Each chapter has the following steps:

The application will be a simple one: starting from multiple Word documents, we will build a summary Excel file. The point here is not to build a 100% production-ready application; instead, we focus on code organization and on discussing the common problems that appear along this journey.

Please share feedback by opening an issue or pull request at https://github.com/ignatandrei/console_to_saas/

Andrei Ignat - http://msprogrammer.serviciipeweb.ro/

He has more than 20 years of programming experience. He started with VB3, went through plain old ASP, and is a former C# Microsoft Most Valuable Professional (MVP). He is also a consultant, author, speaker, www.adces.ro community leader and http://www.asp.net/ moderator.

You can ask him any .NET related question – he will be glad to answer – if he knows the answer. If not, he will learn.

Daniel Tila - is a systems engineer with a master's degree in system architecture and 10+ years of experience. He has worked on systems ranging from airplane engine software and train security doors to bank payment and printing systems.

He has more than 10 years of experience in the .NET programming environment. He has designed applications ranging from software that facilitates communication between banks and printing services to airplane engine software. Now, he is a certified UiPath trainer and co-founder of Automation Pill, a company that helps organizations optimize their costs by automating repetitive, mundane tasks.

Thanks go to these wonderful people (emoji key):

Daniel Costea 🖋

This project follows the all-contributors specification. Contributions of any kind welcome!

True story: one day my boss came and asked me to discuss how we could help our colleagues from the financial department, who were thinking about automating their process. We found a spare room and scheduled a meeting, where we found out that 3 employees were manually extracting the client information (name, identity information, address) and contract agreement details (fee, payment schedule) into an Excel file and saving the document in a protected area.

Make an application that reads all Word documents from a folder, parses them, and outputs their contents to Excel. Make it work, the easy way. You can find sample data in the data folder of the GitHub project.

After some brainstorming and analysis of the constraints, we ended up developing a solution that reads from a shared folder and runs the process without any human intervention. This would allow all our employees to continue their work simultaneously, with less effort than before. The final setup was:

We wrapped up everything in the same project, just to make sure it works and to keep our focus on delivering. We put everything in the main file of the console app, and we chose C# because it was the language we were most comfortable with and it let us fulfill our requirements in a minimum amount of time.

```csharp
// read the folder path typed by the user
string folderWithWordDocs = Console.ReadLine();
//omitted code for clarity
foreach (string file in Directory.GetFiles(folderWithWordDocs, "*.docx"))
{
    //omitted code for clarity
    // open the Word document with NPOI; the stream is closed at the end of the block
    using (var stream = File.OpenRead(file))
    {
        XWPFDocument document = new XWPFDocument(stream);
        // the contractor details are read from the first table of the document
        var contractorDetails = ExactContractorDetails(document.Tables[0]);
        allContractors.Add(contractorDetails);
    }
}
```

At this stage, we solved the problem with just a sequence of operations, without any complex control flow. Great!

How to create a menu for the console app - https://github.com/migueldeicaza/gui.cs

When reading files from the hard disk, you should decide whether you need all the matching file names at once or can process them one by one. Read about the difference between IEnumerable and arrays (e.g. Directory.EnumerateFiles vs Directory.GetFiles) - https://www.codeproject.com/Articles/832189/List-vs-IEnumerable-vs-IQueryable-vs-ICollection-v
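To make the difference concrete, here is a small sketch contrasting the two calls on a folder of Word documents. The `DocxListing` class and its method names are ours, for illustration only:

```csharp
using System.Collections.Generic;
using System.IO;
using System.Linq;

public static class DocxListing
{
    // Directory.GetFiles reads the whole listing into an array before it returns,
    // so every matching path is held in memory at once.
    public static int CountWithGetFiles(string folder) =>
        Directory.GetFiles(folder, "*.docx").Length;

    // Directory.EnumerateFiles returns a lazy IEnumerable<string>: paths are produced
    // while the directory is being read, so Take(count) can stop early.
    public static List<string> FirstDocuments(string folder, int count) =>
        Directory.EnumerateFiles(folder, "*.docx").Take(count).ToList();
}
```

For a folder with a handful of contracts the difference is negligible; it matters for network shares with thousands of files, where `EnumerateFiles` lets the processing start before the whole listing has been read.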

We installed our console app on the computer of one of the employees in the financial department, set it up, and let it run. After a few days, we had some bugs to fix and some new requests came in. Our colleagues were enthusiastic about using our solution, and that was our first real feedback that we were solving a customer pain point. We anticipated that our product would not be perfect (so more feedback would follow), and to keep track of what we did, we needed source control. After a few iterations, our financial department was happy and we were ready with our first MVP (minimum viable product).

Try to refactor the solution from Chapter 1 to extract the business logic (i.e. transforming Word files into an Excel file) into a separate file.

As a starting point, we decided to go beyond a separate file, so we ended up with 2 separate projects:

We chose this approach because it balances effort against value and time, and it gives us the flexibility to ensure separation of concerns. We created the WordContractExtractor class, which handles everything related to the Word file format. We moved the usage of the NPOI library here and made sure that the expected format matches our requirements.

Hence, our solution now looks like this:

This is a simple, long-term solution: it does not waste time and lets us adapt later to possible changes. Moreover, using this technique early in the process helps isolate problems better: there is less code to look at in one place.

To help with versioning, we added source control. We chose Git since it is simple and allows us to work without an internet connection.

```csharp
// the extractor needs the folder that contains the Word documents...
var wordExtractor = new WordContractExtractor(folderWithWordDocs);
// ...and the path of the Excel file to generate
wordExtractor.ExtractToFile(excelResultsFile);
```

Now, all we need to do is use the class and pass the needed parameters. In this case, the file paths are the only requirements. They can come from a config file or from the user's selection. The only check we could add is to make sure the output file is writable. We can add this check before running the extraction, or we can handle the exception separately and display a message to the user. We will see this in the next chapters.
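A minimal sketch of that writability check, assuming the output is a local or network file path. The `OutputCheck.IsWritable` helper is hypothetical, not part of the project:

```csharp
using System;
using System.IO;

public static class OutputCheck
{
    // Returns true when the file at 'path' can be opened for writing.
    // FileMode.OpenOrCreate does not truncate an existing file;
    // a file created only by this probe is deleted again.
    public static bool IsWritable(string path)
    {
        bool existed = File.Exists(path);
        try
        {
            using (new FileStream(path, FileMode.OpenOrCreate, FileAccess.Write, FileShare.None))
            {
            }
            if (!existed)
            {
                File.Delete(path);
            }
            return true;
        }
        catch (Exception ex) when (ex is IOException || ex is UnauthorizedAccessException)
        {
            // locked by another process (e.g. open in Excel), read-only,
            // missing folder, or no permission
            return false;
        }
    }
}
```

The check can be called before `ExtractToFile(excelResultsFile)`. The file can still become locked right after the check (for example, if the user opens it in Excel), so handling the exception remains necessary.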

What is version control: https://en.wikipedia.org/wiki/Version_control

Create a free version control repository at http://github.com/, https://azure.microsoft.com/en-us/services/devops/, or another online source control provider.

Read more about how to improve the design of existing code by refactoring: https://www.amazon.com/Refactoring-Improving-Design-Existing-Code/dp/0201485672

Sharing source code + settings for clients

Our financial department had been using the application for a few months and was happy with it. During a morning coffee break, while we were discussing the new automation trends, our tool was mentioned. The sales department wanted to see it in action, since they were facing a similar situation.

We configured and deployed it on a separate machine for them, and we saw great feedback and real usage on a daily basis. They were excited to see how many hours of paperwork they saved and how much more time they had to pay attention to the clients' needs. They wanted to use the application as an example of automation, and we saw in it a good candidate for product development.

Make a Console solution that can read a configurable setting (the folder path to be searched) from a file instead of having it hard-coded into the application. Do not over-engineer the solution. Make it work, the easy way.

We created a configuration file with all the settings that a particular client can change. For now, the only setting is the directory path. We added a class that manages reading the settings and exposes them in a typed format, so that all the configuration-related settings are in one single place.

We put the setting into a configuration file, instead of making 2 applications, so that we can easily maintain the same application for 2 clients. The impact on the solution is minimal: just create a new class that knows how to read from a config file and exposes the name of the folder as a property.
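A minimal sketch of such a settings class, assuming the configuration is a JSON file read with `System.Text.Json` (part of .NET Core since 3.0). `Settings.From` and `DocumentsLocation` mirror the names used later in this book, but this implementation is our assumption:

```csharp
using System.IO;
using System.Text.Json;

// Typed settings: one property per value a client can change.
public class Settings
{
    // folder that contains the Word documents to process
    public string DocumentsLocation { get; set; }

    // Reads the JSON configuration file and maps it to the typed properties.
    public static Settings From(string jsonFile)
    {
        string json = File.ReadAllText(jsonFile);
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        return JsonSerializer.Deserialize<Settings>(json, options);
    }
}
```

With an `app.json` such as `{ "DocumentsLocation": "C:\\Contracts" }`, each client gets its own file next to the same executable, and the console code only needs `Settings.From("app.json").DocumentsLocation`.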

How an application can have multiple configuration sources (environment, command line, configuration file, code - in this order) - Read about .NET Core endpoint configuration (https://docs.microsoft.com/en-us/aspnet/core/fundamentals/servers/kestrel?view=aspnetcore-2.2)

Hot reloading the configuration: read about IOptionsSnapshot and IOptionsMonitor at https://docs.microsoft.com/en-us/aspnet/core/fundamentals/configuration/options?view=aspnetcore-3.1
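The idea of multiple configuration sources can be sketched without any library: each source is a function from a key to a value, and the first source that defines the key wins. This is a simplified illustration, not the `Microsoft.Extensions.Configuration` API; the `LayeredConfig` class and the `--key=value` argument format are assumptions:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LayeredConfig
{
    // Returns the value of the first source, in the given priority order,
    // that defines the key; null when no source defines it.
    public static string Resolve(string key, params Func<string, string>[] sources)
    {
        foreach (var source in sources)
        {
            string value = source(key);
            if (!string.IsNullOrEmpty(value))
            {
                return value;
            }
        }
        return null;
    }

    // Source: environment variables.
    public static Func<string, string> FromEnvironment() =>
        key => Environment.GetEnvironmentVariable(key);

    // Source: command-line arguments written as --key=value (the last one wins).
    public static Func<string, string> FromCommandLine(string[] args) =>
        key => args
            .Where(a => a.StartsWith("--" + key + "=", StringComparison.OrdinalIgnoreCase))
            .Select(a => a.Substring(key.Length + 3))
            .LastOrDefault();

    // Source: values already read from a configuration file.
    public static Func<string, string> FromDictionary(IDictionary<string, string> values) =>
        key => values.TryGetValue(key, out var value) ? value : null;
}
```

Following the order listed above, the call would be `LayeredConfig.Resolve("DocumentsLocation", LayeredConfig.FromEnvironment(), LayeredConfig.FromCommandLine(args), LayeredConfig.FromDictionary(fileValues), _ => @"C:\Contracts")`, where the last lambda is the default coded in the application.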

Making a Graphical User Interface

At our Digital Transformation Center Department, we have recurring meetings with clients, which are part of our continuous iterative process of identifying things that need to be improved. In one of the meetups we hosted, we invited the financial department to give feedback on the process we had helped automate. We talked about how the process evolved and how, by adopting Digital Solutions, we decreased the manual work.

The presentation ended with a Q&A session and with several clients who were interested in having the application installed. We realized that this was a gap in the current market, and we planned to develop a product around it. We now had an idea validated by a consistent number of users, and we decided to evolve it based on the clients' needs.

Selling the application to multiple clients means that the Word extraction and the location of the documents must be configurable. We ended up creating a GUI that allows users to configure the application with the automation parameters by themselves. This was a valuable feature for our users, since most of them may be non-technical. The ownership of the paths moved from the config file to a more appropriate user interface.

Modify the existing solution to support both Console and GUI.

Both applications have the same functionality: reading files from the hard disk and reading settings. We do not want to copy-paste code and end up with an unmaintainable solution, so we share the source code between the console application and the GUI application.

Because users now work with network folders, which are not always available, we need to make some changes to alert the user about problems. In our case, we have the user interface for:

From a solution with a single project, we now have a solution with 3 projects:

The BL contains the code that was previously in the console application (loading settings, doing extraction) + some new code (logging, others).

The business logic is now simpler:

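```csharp
public void ExtractToFile(string excelFileOutput)
{
    // list the Word documents from the configured location
    string[] files = Directory.GetFiles(_documentLocation, "*.docx");
    // logging is one of the new responsibilities of the BL
    _logger.Info($"processing {files.Length} word documents");
    //code omitted for brevity
}
```

The Console code is now very simple, just calling code from the BL: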

```csharp
// read the typed settings from the configuration file
var settings = Settings.From("app.json");
var extractor = new WordContractExtractor(settings.DocumentsLocation);
extractor.Start();
```

The Desktop application calls the same BL, with more feedback for the user:

```csharp
try
{
    string folderWithWordDocs = folderPath.Text;
    // validate the user input before calling the BL
    if (string.IsNullOrWhiteSpace(folderWithWordDocs))
    {
        MessageBox.Show("please choose a folder");
        return;
    }
    string excelResultsFile = "Contractors.xlsx";
    var wordExtractor = new WordContractExtractor(folderWithWordDocs);
    wordExtractor.ExtractToFile(excelResultsFile);
}
catch (Exception ex)
{
    // keep the details in the log, show only a short message to the user
    _logger.Error(ex, $"exception in {nameof(StartButton_Click)}");
    MessageBox.Show("an error occurred. See the log file for details");
}
```

Logging: https://nlog-project.org/ , https://serilog.net/

.NET Core 3.0 Windows application (WPF, WinForms): https://docs.microsoft.com/en-us/dotnet/core/whats-new/dotnet-core-3-0

MultiTier architecture: https://en.wikipedia.org/wiki/Multitier_architecture
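Since network folders are not always available, the BL can report the problem as a message instead of throwing. A minimal sketch, where `DocumentFolder.TryListDocuments` is a hypothetical helper that both the desktop and the console application could call before starting the extraction:

```csharp
using System;
using System.IO;

public static class DocumentFolder
{
    // Tries to list the Word documents of a (possibly network) folder.
    // Instead of throwing, it returns a message that the UI can show to the user.
    public static bool TryListDocuments(string folder, out string[] files, out string error)
    {
        files = Array.Empty<string>();
        error = null;
        if (string.IsNullOrWhiteSpace(folder))
        {
            error = "please choose a folder";
            return false;
        }
        try
        {
            // Directory.Exists returns false (it does not throw) when the folder
            // is missing or its existence cannot be determined, e.g. an offline share
            if (!Directory.Exists(folder))
            {
                error = $"folder {folder} is not available";
                return false;
            }
            files = Directory.GetFiles(folder, "*.docx");
            return true;
        }
        catch (Exception ex) when (ex is IOException || ex is UnauthorizedAccessException)
        {
            // the share can disappear between the check and the listing
            error = $"cannot read folder {folder}: {ex.Message}";
            return false;
        }
    }
}
```

The desktop code can then call `MessageBox.Show(error)` when the method returns `false`, while the console application prints the same message.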

Componentization / Testing / Refactoring

One interested client mentioned that they were using zip files to back up the documents of their company. They were interested in our product, but we did not support zip files. We shared our vision of creating a highly customizable product, in which reading files from zip archives would be an additional feature. The client agreed to pay for it, and we started the development.

Starting from the solution, add support for reading either from a folder or from a zip file.

Changing the business core requires refactoring, which usually involves regression testing. Before starting any refactoring, we need to define the supported test scenarios from the business perspective. This is a list that usually includes inputs, the appropriate action, and the expected output. The easiest way to achieve this is to add unit tests that do the checking automatically.

Altering pretty much the entire core adds some regression risk. To compensate for that, we will add tests (unit and/or integration and/or component and/or system) that verify both the old behavior and the new one. The tests will make sure that our core (reading and parsing) produces the expected output with various file systems. We will add a new project for unit/component tests, which references the business core project and injects different components (in our case, a file system). This core modification will also impact other projects, including the GUI and console applications. In our case, we want to make sure that the contract parsing succeeds with both file systems.

We already identified the operations that need to be abstract, so let’s write the interfaces that allow this:

Our core program needs to:

These two operations are part of the actual business flow. We need to abstract the operations of finding files and reading a file, and allow different implementations.

One implementation is from the actual file system (an existing directory) and the other one from the zip archive, hence:

A zip file can contain folders and files, similar to a directory. Hence, we can perform the same operations as in the actual file system (listing files and reading a file).
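The abstraction can be sketched as follows. The interface members (`FindFiles`, `OpenRead`) and the `DirectoryFileSystem` name are our assumptions; `ZipFileSystem` matches the class name used later in this book, but its implementation here is only an illustration based on `System.IO.Compression`:

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;
using System.Linq;

public interface IFileSystem
{
    // relative paths (with '/') of the files whose name ends with the extension
    IEnumerable<string> FindFiles(string extension);
    // opens one of the files returned by FindFiles
    Stream OpenRead(string relativePath);
}

public class DirectoryFileSystem : IFileSystem
{
    private readonly string _root;
    public DirectoryFileSystem(string root) => _root = root;

    public IEnumerable<string> FindFiles(string extension) =>
        Directory.EnumerateFiles(_root, "*", SearchOption.AllDirectories)
            .Where(f => f.EndsWith(extension, StringComparison.OrdinalIgnoreCase))
            .Select(f => Path.GetRelativePath(_root, f).Replace(Path.DirectorySeparatorChar, '/'))
            .ToList();

    public Stream OpenRead(string relativePath) =>
        File.OpenRead(Path.Combine(_root, relativePath));
}

public class ZipFileSystem : IFileSystem
{
    private readonly string _zipPath;
    public ZipFileSystem(string zipPath) => _zipPath = zipPath;

    public IEnumerable<string> FindFiles(string extension)
    {
        using var archive = ZipFile.OpenRead(_zipPath);
        // folder entries end with '/' and are skipped by the extension filter
        return archive.Entries
            .Where(e => e.FullName.EndsWith(extension, StringComparison.OrdinalIgnoreCase))
            .Select(e => e.FullName)
            .ToList(); // materialize before the archive is disposed
    }

    public Stream OpenRead(string relativePath)
    {
        using var archive = ZipFile.OpenRead(_zipPath);
        var entry = archive.GetEntry(relativePath)
            ?? throw new FileNotFoundException(relativePath);
        // copy the entry to memory so the returned stream outlives the archive
        var copy = new MemoryStream();
        using (var entryStream = entry.Open())
        {
            entryStream.CopyTo(copy);
        }
        copy.Position = 0;
        return copy;
    }
}
```

Because the extractor only sees `IFileSystem`, a test can run the same checks against both implementations, which is the regression safety net described above.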

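```csharp
var settings = Settings.From("app.json");
// the settings decide which file system (folder or zip archive) is used
var fileSystem = settings.FileSystemProvider.CurrentFileSystem();
// the extractor no longer knows where the files come from
var extractor = new WordContractExtractor(fileSystem);
extractor.Start();
```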

There are a few issues that you could encounter when moving this code example to production. The WordContractExtractor depends on the IFileSystem, and the latter holds the state (it gives access to the source files). While it is fine to have read-only state (multiple requests will not alter it), you could have concurrency problems if files are written there. In this case, the IFileSystem has only methods for reading. Your homework is to modify the WordContractExtractor to be consistent and to use the IFileSystem for writing.

Your task is to:

Ecosystem / Versioning / CI

Our second client has different types of contracts that need to be considered. Having 2 clients that run the same code but need it to work in different ways is a challenging task.

There are two approaches to this problem: create separate branches for each client or use a single branch and make the product behave differently.

In the first approach, we keep our main code in master and create a separate branch for each client. This is an easy task, but let’s see the consequences:

Advantages

Problems

Another solution is to have a single code branch, but this means designing the application so that features can be enabled or disabled by configuration.
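A minimal sketch of configuration-driven features, assuming each client's configuration file lists the features that are enabled for that client. The `FeatureFlags` and `ClientSetup` classes and the feature names are hypothetical:

```csharp
using System;
using System.Collections.Generic;

// The list of enabled features comes from each client's configuration,
// while the compiled code is the same for everybody.
public class FeatureFlags
{
    private readonly HashSet<string> _enabled;

    public FeatureFlags(IEnumerable<string> enabledFeatures) =>
        _enabled = new HashSet<string>(enabledFeatures, StringComparer.OrdinalIgnoreCase);

    public bool IsEnabled(string feature) => _enabled.Contains(feature);
}

public static class ClientSetup
{
    // Illustrative feature names: "ZipFiles" for the client that keeps documents
    // in zip archives, "AlternativeContracts" for the client with another contract type.
    public static string Describe(FeatureFlags flags)
    {
        string source = flags.IsEnabled("ZipFiles") ? "zip archive" : "folder";
        string contracts = flags.IsEnabled("AlternativeContracts")
            ? "alternative contracts"
            : "standard contracts";
        return $"{source} + {contracts}";
    }
}
```

Every new client-specific behavior adds another branch of this kind, which is the price paid for keeping a single code line.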

Advantages

Problems

When a client reports a bug, we must know which code was compiled into the version distributed to that client. For this, we should mark the source code before the distributable is built, and this can be done with tags or branches in source control. We can also have the application report the versions of its components.
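For the last point, a minimal sketch that reads the version of an assembly through reflection. It assumes the version numbers are set at build time (for example, from the Git tag), which is not shown here; `VersionInfo` is a hypothetical helper:

```csharp
using System.Reflection;

public static class VersionInfo
{
    // Builds a short description, such as "ContractExtractor 1.2.0.0 (1.2.0-client-a)"
    // (illustrative values), that can be logged at startup or shown in an About box.
    public static string Describe(Assembly assembly)
    {
        AssemblyName name = assembly.GetName();
        // InformationalVersion is free text, so it can carry a tag or a commit id
        string informational = assembly
            .GetCustomAttribute<AssemblyInformationalVersionAttribute>()
            ?.InformationalVersion;
        return informational == null
            ? $"{name.Name} {name.Version}"
            : $"{name.Name} {name.Version} ({informational})";
    }
}
```

Calling `Describe` for each entry of `AppDomain.CurrentDomain.GetAssemblies()` gives the versions of all loaded components, which can then be matched with the tagged source code.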

Dealing with multiple clients adds complexity that must be handled, and there are several challenges:

These challenges would be easier to tackle if we had a central point. Moreover, transforming this project into a SAAS would allow a subscription model for each individual client.

Create a website that allows us to import a zip file with multiple documents and output the resulting Excel.

Create a new web project and use the existing functionality in the ContractExtractor project. The application must be converted to a web application (eventually, a mobile application should be available as well). The web application should allow managing multiple clients and should be multi-tenant enabled.

An authentication mechanism should allow one or more ways to identify users: username + password, a third-party provider like Google / Facebook, or an integrated provider like Azure Active Directory / Okta. If you want to know how to set up such a mechanism, please read:

The zip files can be uploaded and processed on the web. For local files, however, the architecture should be somewhat different: think about an agent that runs locally, monitors the local file system, and uploads the data to the web to be processed. This gives us access to local resources while the processing happens on the web.
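A minimal sketch of the agent's upload step, assuming the agent sends a zip of the local Word documents to the upload action of the web application (form field `file`, route `Home/UploadFile` under the default MVC routing) and that this action does not require an antiforgery token. The `LocalAgent` class is hypothetical, and the monitoring part (for example with `FileSystemWatcher` or a timer) is left out:

```csharp
using System.IO;
using System.IO.Compression;
using System.Net.Http;
using System.Threading.Tasks;

public static class LocalAgent
{
    // Builds an in-memory zip with the .docx files of the folder.
    public static byte[] PackDocuments(string folder)
    {
        using var buffer = new MemoryStream();
        // the archive must be disposed before reading the bytes,
        // otherwise the zip central directory is not written yet
        using (var archive = new ZipArchive(buffer, ZipArchiveMode.Create, leaveOpen: true))
        {
            foreach (string file in Directory.EnumerateFiles(folder, "*.docx"))
            {
                archive.CreateEntryFromFile(file, Path.GetFileName(file));
            }
        }
        return buffer.ToArray();
    }

    // Posts the zip in the "file" form field, like the HTML upload form does.
    // The client's BaseAddress (the web application URL) is an assumption.
    public static async Task<HttpResponseMessage> UploadAsync(HttpClient client, byte[] zip)
    {
        using var content = new MultipartFormDataContent();
        content.Add(new ByteArrayContent(zip), "file", "documents.zip");
        return await client.PostAsync("Home/UploadFile", content);
    }
}
```

The agent only moves data; the parsing stays in the web application, so all clients benefit from the same business layer.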

Most of the code will belong to the HTML and the backend infrastructure.

```html
<div class="text-center">
    <h1 class="display-4">Welcome to extractor!</h1>
    Please upload your zip file with documents
    <form asp-controller="Home" asp-action="UploadFile" method="post"
          enctype="multipart/form-data">
        <input type="file" name="file" id="fileToSubmit" />
        <input type="submit" value="Generate Summary" />
    </form>
</div>
```

The business layer code, if well written, will be mostly the same:

```csharp
// keep only the file name, so the uploaded name cannot point to another folder
var fullPathFile = Path.Combine(_environment.WebRootPath, Path.GetFileName(file.FileName));
// note: files under WebRootPath can be served as static files; a folder outside it is safer
// (System.IO.File is fully qualified because File is also a Controller method)
if (System.IO.File.Exists(fullPathFile))
    System.IO.File.Delete(fullPathFile);
// save the uploaded zip to disk
using (var stream = new FileStream(fullPathFile, FileMode.Create))
{
    await file.CopyToAsync(stream);
}
// the same business layer as in the console and desktop applications
var fileSystem = new ZipFileSystem(fullPathFile);
var extractor = new WordContractExtractor(fileSystem);
extractor.Start();
```

A web project is composed of two parts: the view (what the user sees) and the backend (which prepares the data and makes the actions possible). These two are implemented and rendered at different levels: the view is rendered in the user's browser and can contain any logic that makes sense there (simple validation, animations), while the backend resides on the web server and processes the user's requests (security, validation, others).

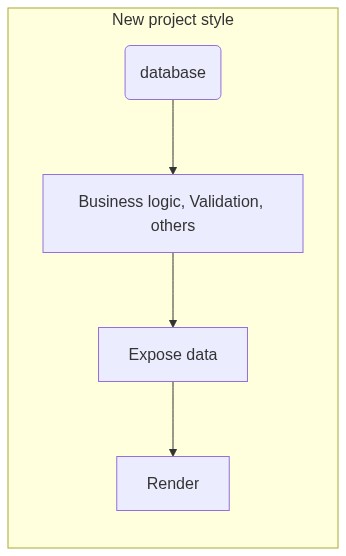

Previously, we had both parts in the same project, and the data flow looked like in this diagram:

A modern SAAS project usually contains at least 2 projects, splitting the 2 parts: one for data retrieval (the backend) and one that displays the data on the various GUIs (browser, desktop, mobile - the frontend). This is not mandatory, but it is a powerful mechanism for smooth and responsive applications. In this case, the diagram looks like:

A big advantage of keeping the projects separate is that we can connect another client that renders and displays the same data in a different way, such as a mobile application.

An application is multi-tenant if it can serve multiple clients at the same time; a single-tenant application serves only one client. Modern applications are often designed as multi-tenant because they are easier to maintain and scale. For all the advantages and disadvantages, please see https://en.wikipedia.org/wiki/Multitenancy. The choice between these will impact (at least) the construction of the website and the database structure.
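A minimal sketch of the two ingredients of a multi-tenant site, assuming each client uses its own sub-domain (for example, `acme.extractor.example`) of one shared application. Both classes are hypothetical; in a database, the same idea usually becomes a tenant id column on the shared tables, or one database per tenant:

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

public static class TenantResolver
{
    // Returns the first label of the host name (without port) as the tenant id,
    // or null when there is no sub-domain.
    public static string FromHost(string host)
    {
        string[] labels = host.Split('.');
        return labels.Length > 2 ? labels[0].ToLowerInvariant() : null;
    }
}

// One shared store for all clients; every read and write is keyed by tenant id,
// so a client only sees its own contract summaries.
public class TenantScopedStore
{
    private readonly ConcurrentDictionary<string, ConcurrentQueue<string>> _summaries =
        new ConcurrentDictionary<string, ConcurrentQueue<string>>();

    public void Add(string tenantId, string summary) =>
        _summaries.GetOrAdd(tenantId, _ => new ConcurrentQueue<string>()).Enqueue(summary);

    public IReadOnlyCollection<string> Get(string tenantId) =>
        _summaries.TryGetValue(tenantId, out var queue)
            ? queue.ToArray()
            : Array.Empty<string>();
}
```

Forgetting the tenant id in a single query leaks data between clients, which is why it pays to centralize the scoping in one place like this.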

The current project structure follows a monolithic approach: a single web application rich enough to perform multiple tasks. This makes it hard to handle multiple clients, since one single server handles all the requests and can grow only by adding resources to that machine. This is named vertical scaling, and it is one way to scale an application. You can monitor the load of the server and assign different clients to different servers (multi-tenant). The newer approach is to scale horizontally: you split the application by features (named microservices), and each of these services runs on a smaller machine. This makes it easy to scale by feature (individual microservice), both from the application perspective (one feature could be used more heavily than others) and from the cloud hosting perspective. How do you split? There is no single best practice; it depends on the use case, but there are 2 popular splitting approaches:

We encourage you to learn more by going through a selection of resources:

You may need to connect with other services when an event happens in your application (a contract is parsed, a contract summary is created). This problem is usually solved by adopting an event bus architecture (see microservices). While it is not the best production-ready solution (it raises many security concerns), you could create a service that exposes just some of these events. One feature you may want to implement is to hook into a service that creates a notification when your contract has been created. Many cloud services allow this, such as IFTTT or Zapier. One implementation method is WebHooks; for .NET, please see https://docs.microsoft.com/en-us/aspnet/webhooks/
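A sketch of the sending side, assuming the subscriber registered a URL and a shared secret. The event name `contract.parsed`, the JSON shape and the `X-Signature-SHA256` header are our own conventions, not part of ASP.NET WebHooks. Signing the body with HMAC-SHA256 addresses part of the security concern mentioned above, because the receiver can recompute the signature and reject forged calls:

```csharp
using System;
using System.Net.Http;
using System.Security.Cryptography;
using System.Text;
using System.Text.Json;

public static class WebhookSender
{
    // HMAC-SHA256 of the body with the shared secret, as lowercase hex.
    public static string Sign(string body, string secret)
    {
        using var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(secret));
        byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(body));
        return BitConverter.ToString(hash).Replace("-", "").ToLowerInvariant();
    }

    // Builds the POST request announcing that a contract was parsed.
    public static HttpRequestMessage BuildRequest(Uri subscriberUrl, string contractName, string secret)
    {
        string body = JsonSerializer.Serialize(new { @event = "contract.parsed", contract = contractName });
        var request = new HttpRequestMessage(HttpMethod.Post, subscriberUrl)
        {
            Content = new StringContent(body, Encoding.UTF8, "application/json")
        };
        // the receiver recomputes Sign(body, secret) and compares it with this header
        request.Headers.Add("X-Signature-SHA256", Sign(body, secret));
        return request;
    }
}
```

The request is sent with `await httpClient.SendAsync(request)`; in production, retries and a timeout are needed, because the subscriber may be offline.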

Continuous integration helps minimize the time needed to discover code errors. It is useful for teams of any size, since you can define quality control rules in the integration system that must pass after each code modification. The typical steps in a CI system are:

There are many CI/CD systems (that you can run on your computer or in the cloud) such as:

Continuous delivery usually targets a test environment such as UAT (user acceptance testing), and the deployment can happen after each commit or on a schedule (daily). For production deployment, there are many strategies, and the choice really depends on the target quality and the user dynamics. The best-known deployment strategies are:

This book tries to reproduce, as realistically as possible, a use case that goes from a prototype to a Software as a Service product. This story is not real, but it is based on our experience and the difficulties we had over time. The book focuses on following a process and showing some tools to achieve the deliverable, not on implementing the best coding practices.

There are more things to consider to have this kind of application in production. We can mention load testing, profiling, and optimization but they are out of the scope of this book.

We did our best to make this book as useful as possible; if you think it can be improved, we would highly appreciate your feedback and accept pull requests on GitHub at https://github.com/ignatandrei/console_to_saas/actions.

We hope you have enjoyed reading as much as we enjoyed writing. If you need help with your software implementations, you can reach us for consultancy services at:

Good luck in your projects!